👋 Hi, I'm

"Create and build whenever I can"

Perception and action: two sides of the same coin

We perceive in order to act, and we act in order to perceive. — J.J. Gibson

Since the beginning of my research, I've focused on visual planning, guided by the view that perceiving is an aspect of acting. Over the years, my work has spanned a diverse range of areas, from early efforts designing assistive AR experiences to today's vision-language models that integrate vision, language, and action. Despite these varied applications, the aim remains clear: to create unified perceptual systems that empower both humans and machines to better shape the world together.

Services & Tools

A personal toolkit of research tools and playful services that support academic work with a touch of fun.

掐指能算半边天

Traditional Chinese fortune-telling algorithms with fun, relaxed predictions.

Perceptual Copilot

An experimental prototype that integrates OpenAI agents with visual tools to process real-time video streams.

Re:Read

More insights with less effort

Uptime

Continuous monitoring of jing.vision service uptime.

LangBind

Language binding and integration service for multimodal applications.

Re:Search

Re:Search on the optimized path

Pouchi

Discover and share the ideas that matter, from research to creation.

Research & Projects

A collection of research projects, presented with artistic cover images and demos, that focus on real-world system design and applications.

Perceptual Copilot

An experimental prototype that integrates OpenAI agents with visual tools to process real-time video streams.

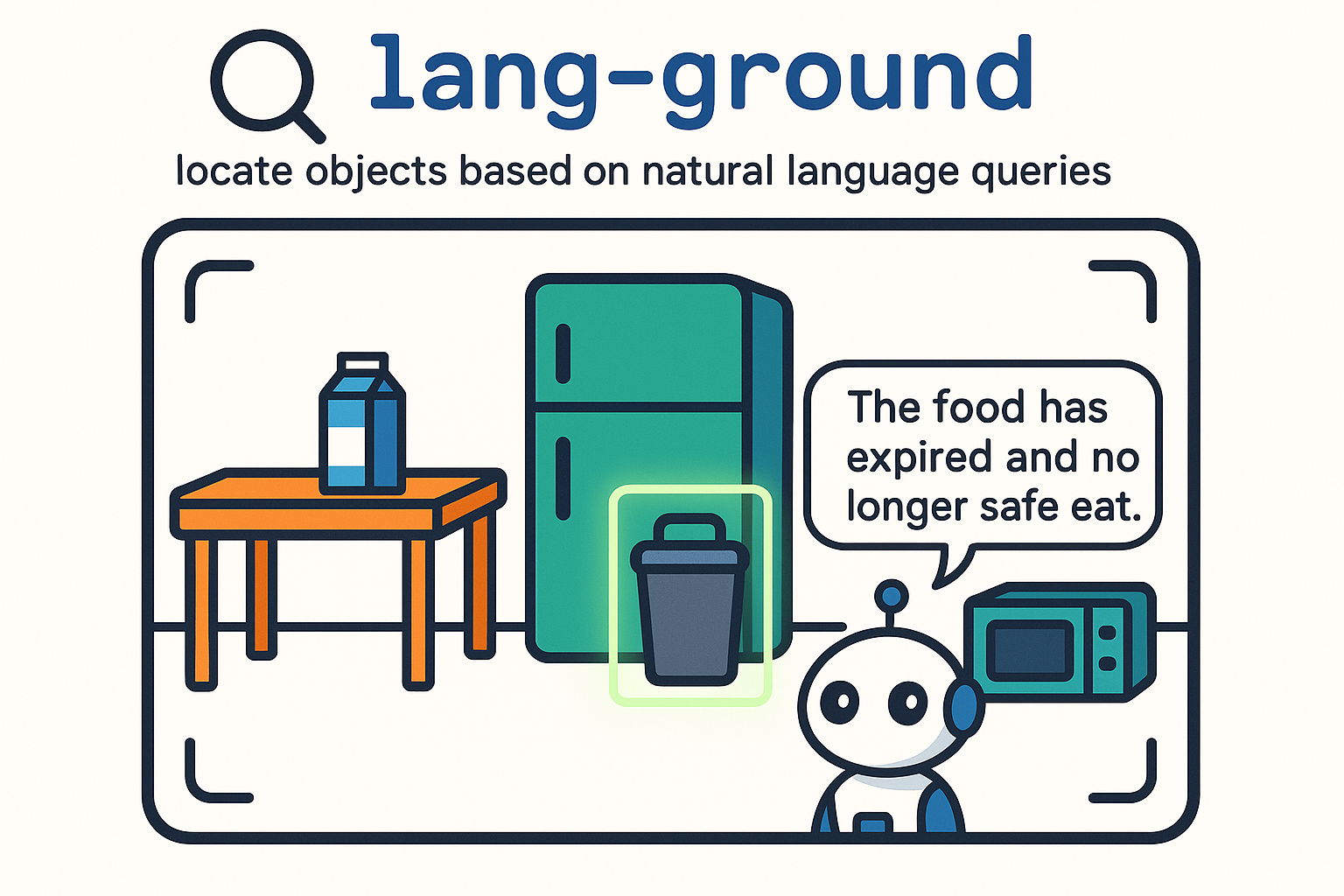

Language Grounding

Use natural language to localize and track objects in real time with computer vision.

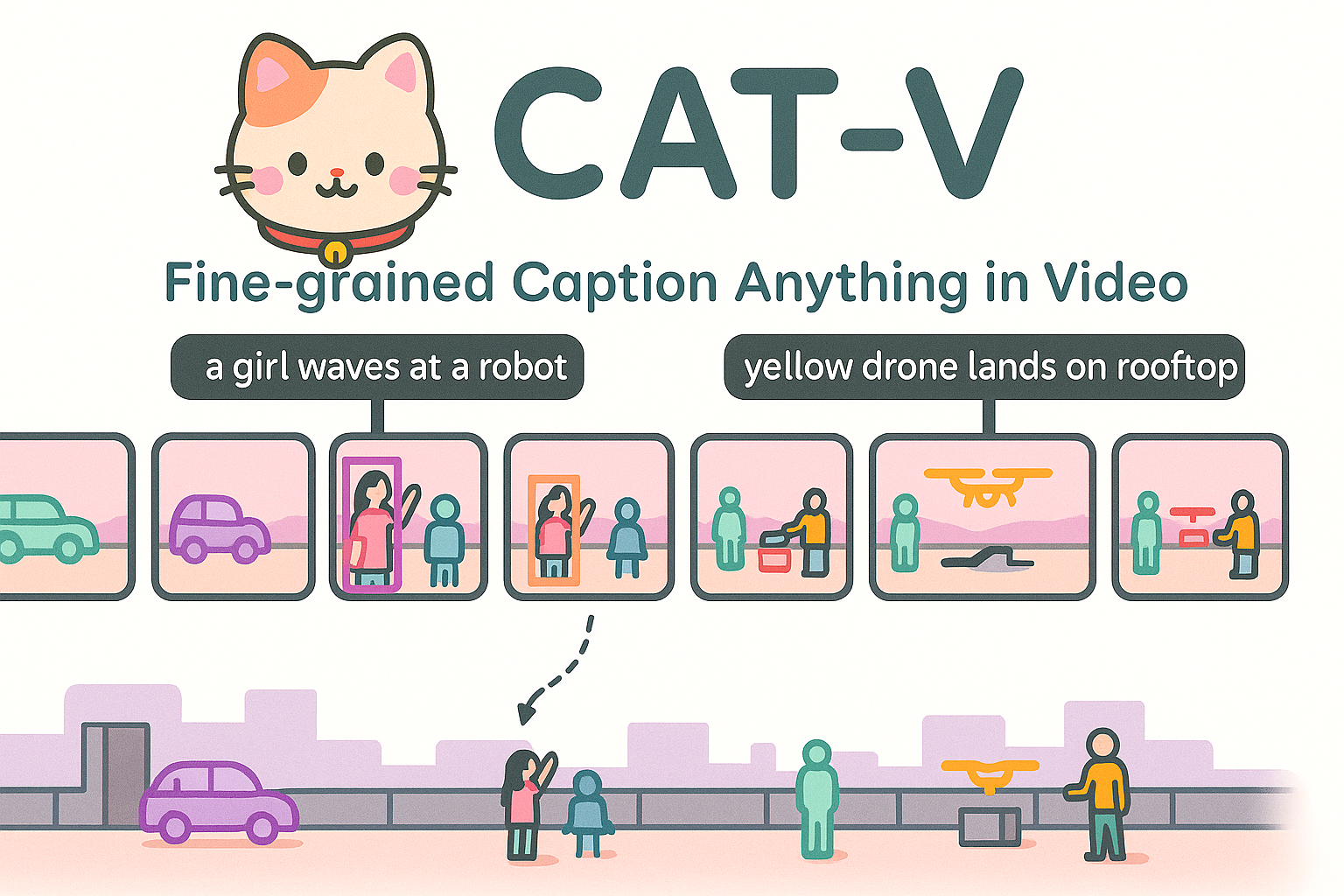

CAT-V

A computer vision toolkit for image and video analysis built on state-of-the-art algorithms.

Streamem

A streaming application platform for real-time content delivery and management with modern web technologies.

AR AI Assistant

A research and implementation platform that showcases AI techniques and their applications in an augmented reality assistant.

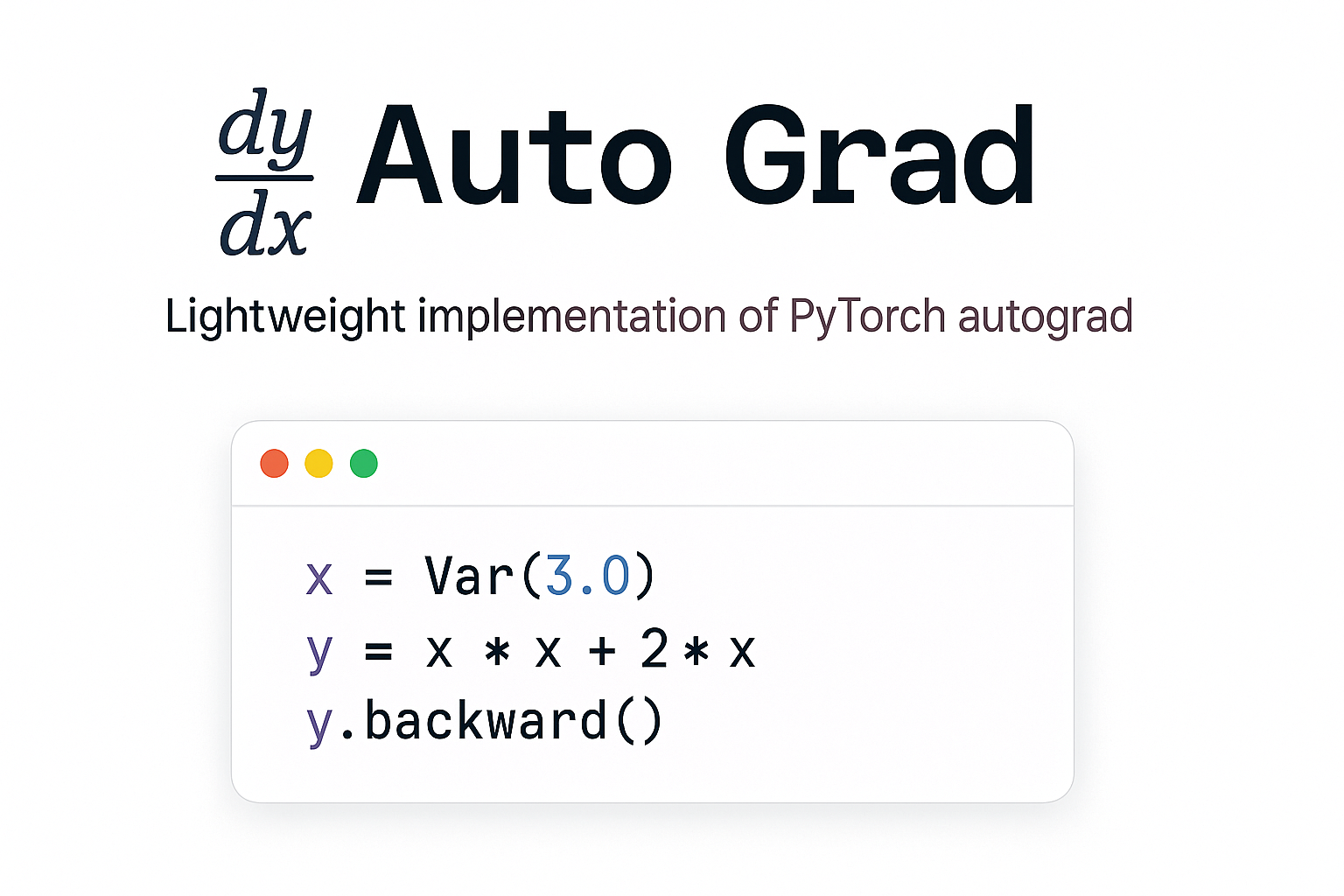

Automatic Differentiation

A repository for exploring and implementing automatic differentiation algorithms, enabling efficient computation of derivatives for scientific computing.
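To make "efficient computation of derivatives" concrete, here is a minimal sketch of forward-mode automatic differentiation with dual numbers. It is a generic illustration of the technique, not code from the repository; the names `Dual`, `derivative`, `sin`, and `exp` are hypothetical, and only `+`, `-`, `*`, `sin`, and `exp` are supported.

```python
import math
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Dual:
    """Dual number val + der*eps with eps**2 == 0; `der` carries the derivative."""
    val: float
    der: float = 0.0

    def __add__(self, other: Union["Dual", float]) -> "Dual":
        o = _lift(other)
        return Dual(self.val + o.val, self.der + o.der)

    __radd__ = __add__

    def __sub__(self, other: Union["Dual", float]) -> "Dual":
        o = _lift(other)
        return Dual(self.val - o.val, self.der - o.der)

    def __rsub__(self, other: float) -> "Dual":
        return _lift(other) - self

    def __mul__(self, other: Union["Dual", float]) -> "Dual":
        o = _lift(other)
        # Product rule: (u * v)' = u' * v + u * v'
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)

    __rmul__ = __mul__


def _lift(x: Union[Dual, float]) -> Dual:
    """Treat plain numbers as constants (derivative 0)."""
    return x if isinstance(x, Dual) else Dual(float(x))


def sin(x: Dual) -> Dual:
    # Chain rule: sin(u)' = cos(u) * u'
    return Dual(math.sin(x.val), math.cos(x.val) * x.der)


def exp(x: Dual) -> Dual:
    # Chain rule: exp(u)' = exp(u) * u'
    e = math.exp(x.val)
    return Dual(e, e * x.der)


def derivative(f: Callable[[Dual], Dual], x: float) -> float:
    """Seed der=1 so the output's `der` is df/dx evaluated at x."""
    return f(Dual(x, 1.0)).der


if __name__ == "__main__":
    f = lambda x: x * x * sin(x) + 3 * x
    print(derivative(f, 2.0))  # analytic: 2x*sin(x) + x**2*cos(x) + 3
```

Forward mode needs one pass per input variable, which suits functions with few inputs; reverse mode (backpropagation) is usually preferred when there are many inputs and a single scalar output.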

Why Reasoning Matters? A Survey of Advancements in Multimodal Reasoning

Jing Bi, Susan Liang, Xiaofei Zhou, Pinxin Liu, Junjia Guo, Yunlong Tang, Luchuan Song, Chao Huang, Ali Vosoughi, Guangyu Sun, Jinxi He, Jiarui Wu, Shu Yang, Daoan Zhang, Chen Chen, Lianggong Bruce Wen, Zhang Liu, Jiebo Luo, Chenliang Xu

A comprehensive survey examining reasoning techniques in both textual and multimodal LLMs, addressing the challenge of integrating visual and textual inputs while resolving ambiguities across modalities.

VERIFY: A Benchmark of Visual Explanation and Reasoning for Investigating Multimodal Reasoning FidelitY

Jing Bi*, Junjia Guo*, Susan Liang*, Guangyu Sun, Luchuan Song*, Yunlong Tang*, Jinxi He*, Jiarui Wu*, Ali Vosoughi*, Chen Chen, Chenliang Xu*

The first benchmark explicitly designed to evaluate the reasoning paths of MLLMs on visual reasoning tasks, with novel metrics that assess reasoning fidelity beyond accuracy.

Unveiling Visual Perception in Language Models: An Attention Head Analysis Approach

Jing Bi, Junjia Guo, Yunlong Tang, Lianggong Bruce Wen, Zhang Liu, Chenliang Xu

Uses attention head analysis to study and improve visual perception in language models, with a focus on spatial and temporal dynamics.
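A common first step in attention head analysis is to measure how much attention each head places on image tokens. The sketch below is a generic version of that measurement, not the paper's method; it assumes attention maps are available as nested lists indexed `[layer][head][query][key]` and that the positions of visual tokens are known. The function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

# Assumed layout: attn[layer][head][query][key], each query row summing to ~1.
AttentionMaps = List[List[List[List[float]]]]


def visual_attention_share(
    attn: AttentionMaps, visual_idx: Sequence[int]
) -> Dict[Tuple[int, int], float]:
    """Mean fraction of each head's attention mass that lands on visual tokens."""
    visual = set(visual_idx)
    scores: Dict[Tuple[int, int], float] = {}
    for layer, heads in enumerate(attn):
        for head, rows in enumerate(heads):
            shares = []
            for row in rows:
                total = sum(row)
                on_visual = sum(w for key, w in enumerate(row) if key in visual)
                shares.append(on_visual / total if total > 0 else 0.0)
            # Average over query positions for this (layer, head).
            scores[(layer, head)] = sum(shares) / len(shares) if shares else 0.0
    return scores


def top_visual_heads(scores: Dict[Tuple[int, int], float], k: int) -> List[Tuple[int, int]]:
    """The k (layer, head) pairs that attend most to visual tokens."""
    return sorted(scores, key=lambda lh: scores[lh], reverse=True)[:k]


if __name__ == "__main__":
    # Toy example: one layer, two heads, three keys; keys 0 and 1 are visual tokens.
    toy = [[[[0.5, 0.5, 0.0], [0.25, 0.25, 0.5]],
            [[0.0, 0.0, 1.0], [0.1, 0.0, 0.9]]]]
    scores = visual_attention_share(toy, [0, 1])
    print(scores, top_visual_heads(scores, 1))
```

Averaging over query positions gives one number per head, which makes heads easy to rank; a finer analysis would keep the per-position shares to inspect spatial or temporal patterns.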

AVicuna: Audio-Visual Conversation Understanding

Yunlong Tang, Daiki Shimada, Jing Bi, Mingqian Feng, Hang Hua, Chenliang Xu

A multimodal large language model capable of aligning audio-visual events with temporal intervals and text tokens. Built on the PU-VALOR dataset of over 114,000 pseudo-untrimmed videos, AVicuna excels in temporal localization and time-aware dialogue for audio-visual understanding.
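Temporal localization is commonly scored by the intersection-over-union (IoU) between a predicted time interval and the ground-truth interval, reported as recall at an IoU threshold. The sketch below illustrates that standard metric; it is not taken from the AVicuna evaluation code, and the function names and the 0.5 default threshold are assumptions.

```python
from typing import List, Tuple

Interval = Tuple[float, float]  # (start, end) in seconds


def temporal_iou(pred: Interval, gt: Interval) -> float:
    """Intersection-over-union of two 1-D time intervals."""
    for start, end in (pred, gt):
        if end < start:
            raise ValueError(f"interval ends before it starts: {(start, end)}")
    inter = max(0.0, min(pred[1], gt[1]) - max(pred[0], gt[0]))
    union = (pred[1] - pred[0]) + (gt[1] - gt[0]) - inter
    return inter / union if union > 0 else 0.0


def recall_at_iou(preds: List[Interval], gts: List[Interval], threshold: float = 0.5) -> float:
    """Fraction of queries whose predicted interval reaches `threshold` IoU with ground truth."""
    if len(preds) != len(gts):
        raise ValueError("need one prediction per ground-truth interval")
    if not gts:
        return 0.0
    hits = sum(temporal_iou(p, g) >= threshold for p, g in zip(preds, gts))
    return hits / len(gts)
```

Reporting recall at several thresholds (for example 0.3, 0.5, and 0.7) shows how tightly predicted intervals match, not just whether they overlap.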

OSCaR: Object State Captioning and State Change Representation

Nguyen Nguyen, Jing Bi, Ali Vosoughi, Yapeng Tian, Pooyan Fazli, Chenliang Xu

A comprehensive dataset and benchmark for evaluating multimodal large language models on object state captioning and state change representation. OSCaR consists of 14,084 annotated video segments with nearly 1,000 unique objects from various egocentric video collections, setting a new testbed for understanding dynamic environments and object state changes.

EAGLE: Egocentric AGgregated Language-video Engine

Jing Bi, Yunlong Tang, Luchuan Song, Ali Vosoughi, Nguyen Nguyen, Chenliang Xu

A video-based multimodal large language model fine-tuned for egocentric video content using the EAGLE-400K dataset, which comprises 400K visual instruction-tuning samples drawn from diverse sources.

Video Understanding with Large Language Models: A Survey

Yunlong Tang, Jing Bi, Siting Xu, Luchuan Song, Susan Liang, Teng Wang, Daoan Zhang, Jie An, Jingyang Lin, Rongyi Zhu, Ali Vosoughi, Chao Huang, Zeliang Zhang, Feng Zheng, Jianguo Zhang, Ping Luo, Jiebo Luo, Chenliang Xu

A comprehensive survey of the emergent capabilities of Vid-LLMs in video understanding, covering open-ended, multi-granularity reasoning and grouping approaches into three main types: Video Analyzer × LLM, Video Embedder × LLM, and (Analyzer + Embedder) × LLM.

MISAR: A Multimodal Instructional System with Augmented Reality

Jing Bi*, Nguyen Manh Nguyen*, Ali Vosoughi*, Chenliang Xu

An augmented reality system that uses LLMs to integrate visual, auditory, and contextual information, with a focus on quantifying task performance in AR from egocentric video, speech, and context.

Procedure Planning in Instructional Videos via Contextual Modeling and Model-based Policy Learning

Jing Bi, Jiebo Luo, Chenliang Xu

A novel approach that combines Bayesian inference and model-based imitation learning to learn goal-directed action planning from instructional videos, capturing both long-term action associations and short-term action separations.

Learning from Interventions Using Hierarchical Policies for Safe Learning

Jing Bi, Vikas Dhiman, Tianyou Xiao, Chenliang Xu

A hierarchical policy framework that addresses expert reaction delay in Learning from Interventions (LfI) through a novel backtracking interpolation scheme and sub-goal prediction, enabling safe autonomous learning.

Get in Touch

Always interested in collaborations and new ideas. Feel free to reach out!